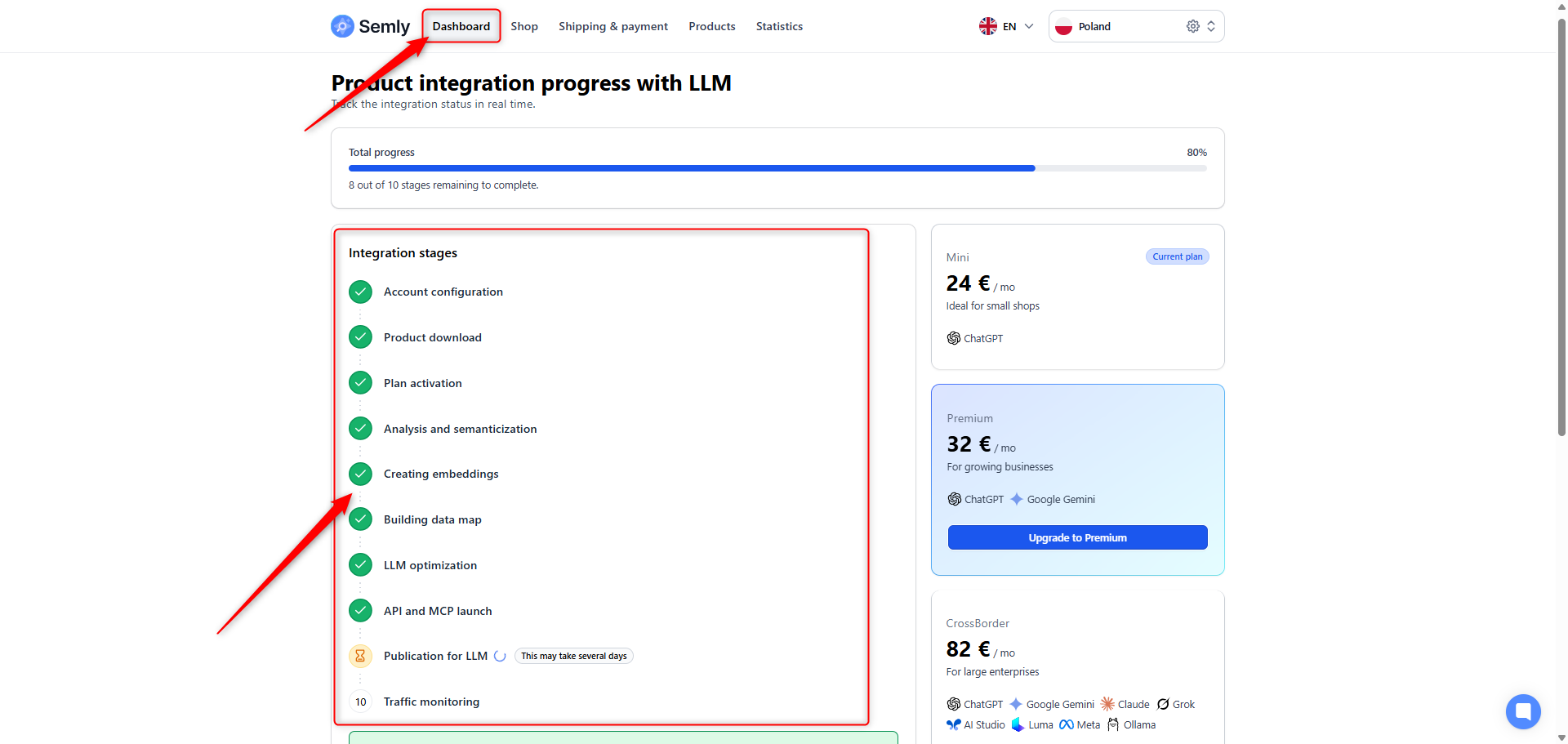

To get the best results, Semly runs an integration process that prepares your product offering to work smoothly with AI tools such as ChatGPT and Gemini.

Learn what each step of integrating your product feed with LLMs involves.

You've successfully set up your Semly account and filled in basic info such as contact details, company data, and language settings. That’s the foundation for starting the integration with LLMs.

The system has pulled your product data from the source file. Now we’re checking that all the basic info is correct and that the products are available.
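To give a feel for this kind of check, here is a minimal sketch in Python. It is not Semly's actual validation logic: the field names (`id`, `name`, `price`, `availability`) and the `"in stock"` value are assumptions chosen for illustration.

```python
# Hypothetical list of fields every product record is expected to have.
REQUIRED_FIELDS = ("id", "name", "price", "availability")


def validate_product(product):
    """Return a list of problems found in one product record."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = product.get(field)
        # Treat missing values and blank strings the same way.
        if value is None or not str(value).strip():
            problems.append(f"missing {field}")
    # Assumed convention: only "in stock" counts as available.
    availability = str(product.get("availability") or "").strip().lower()
    if availability and availability != "in stock":
        problems.append("not available")
    return problems


good = {"id": "1", "name": "Mug", "price": "9.99", "availability": "in stock"}
bad = {"id": "2", "name": "", "price": "5.00", "availability": "out of stock"}
print(validate_product(good))
print(validate_product(bad))
```

A record with an empty problem list would pass on to the next step, while the others would be flagged for correction in the source file.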

You’ve activated a subscription plan that lets you use LLMs.

Your product data is analyzed and prepared to work with the LLM. In this step, we assign meaning to product attributes, features, and descriptions. This is called semantization.

For each product we generate embeddings, which are vector representations of the data. They let the LLM compare products by meaning rather than by exact wording.
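The idea of comparing products through vectors can be sketched as follows. The three-number vectors below are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions, and the model Semly uses is not specified here. Cosine similarity is one common way to compare such vectors, used here as an assumption.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical, hand-written embeddings; not output from a real model.
embeddings = {
    "trail running shoes": [0.9, 0.1, 0.2],
    "road running shoes": [0.8, 0.2, 0.1],
    "espresso machine": [0.1, 0.9, 0.3],
}


def most_similar(name, vectors):
    """Return (other_name, score) for the product closest to `name`."""
    query = vectors[name]
    scored = [
        (other, cosine_similarity(query, vec))
        for other, vec in vectors.items()
        if other != name
    ]
    return max(scored, key=lambda pair: pair[1])


print(most_similar("trail running shoes", embeddings))
```

In this toy data the two pairs of shoes end up close together and far from the espresso machine, even though the comparison never looks at the product names themselves.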

We create a data map that groups information about your products by category, attribute, or relationship. This helps LLMs analyze your offering more effectively.
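One simple way to picture such a data map is a pair of lookup tables, one keyed by category and one keyed by attribute. The sketch below is only an illustration under that assumption; the record layout and the `build_data_map` helper are hypothetical and do not describe Semly's internal format.

```python
from collections import defaultdict


def build_data_map(products):
    """Group product ids by category and by (attribute, value) pair."""
    by_category = defaultdict(list)
    by_attribute = defaultdict(list)
    for product in products:
        by_category[product["category"]].append(product["id"])
        for key, value in product.get("attributes", {}).items():
            by_attribute[(key, value)].append(product["id"])
    return {
        "by_category": dict(by_category),
        "by_attribute": dict(by_attribute),
    }


# Hypothetical sample records.
products = [
    {"id": "A1", "category": "shoes", "attributes": {"color": "red"}},
    {"id": "A2", "category": "shoes", "attributes": {"color": "blue"}},
    {"id": "B1", "category": "bags", "attributes": {"color": "red"}},
]

data_map = build_data_map(products)
print(data_map["by_category"]["shoes"])
print(data_map["by_attribute"][("color", "red")])
```

With a structure like this, a question such as "which red products do you have?" becomes a single lookup instead of a scan over the whole feed.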

The system automatically adapts your data to language model requirements. For example, it unifies formats and units and removes unnecessary content.
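As a rough illustration of what unifying formats and units can mean, the sketch below strips leftover HTML tags, drops empty fields, and converts weights to grams. The field names, the supported units, and both helper functions are assumptions for the example, not a description of the rules Semly applies.

```python
import re

# Assumed conversion table; a real feed may use more units.
UNIT_TO_GRAMS = {"g": 1.0, "kg": 1000.0}


def parse_weight_grams(text):
    """Parse strings like '1,5 kg' or '500g' into grams, or return None."""
    match = re.fullmatch(r"\s*([\d.,]+)\s*(kg|g)\s*", text.lower())
    if not match:
        return None
    try:
        number = float(match.group(1).replace(",", "."))
    except ValueError:
        return None
    return number * UNIT_TO_GRAMS[match.group(2)]


def normalize_product(raw):
    """Return a cleaned copy of one product record."""
    cleaned = {}
    for key, value in raw.items():
        if isinstance(value, str):
            value = re.sub(r"<[^>]+>", " ", value)      # drop HTML tags
            value = re.sub(r"\s+", " ", value).strip()  # collapse whitespace
        if value in ("", None):
            continue  # skip empty fields
        cleaned[key] = value
    weight = cleaned.get("weight")
    if isinstance(weight, str):
        grams = parse_weight_grams(weight)
        if grams is not None:
            del cleaned["weight"]
            cleaned["weight_g"] = grams
    return cleaned


raw = {"name": "  Coffee <b>beans</b> ", "weight": "1,5 kg", "color": ""}
print(normalize_product(raw))
```

Weights that cannot be parsed are left untouched rather than discarded, so nothing is lost silently.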

In this step, we activate the internal APIs and the MCP (Model Context Protocol) layer, which let your data connect directly with LLMs.

Your data is shared with language models such as ChatGPT, depending on your subscription plan. They can use it, for example, to generate descriptions, answer customer questions, or analyze shopping carts.

After you publish, the system monitors activity and how well the models interact with your offering. We check how effective the responses, generated content, and conversions are, so we can keep optimizing the whole process.